Poisson Distribution

Published:

This post covers Introduction to probability from Statistics for Engineers and Scientists by William Navidi.

Basic Ideas

The Poisson Distribution

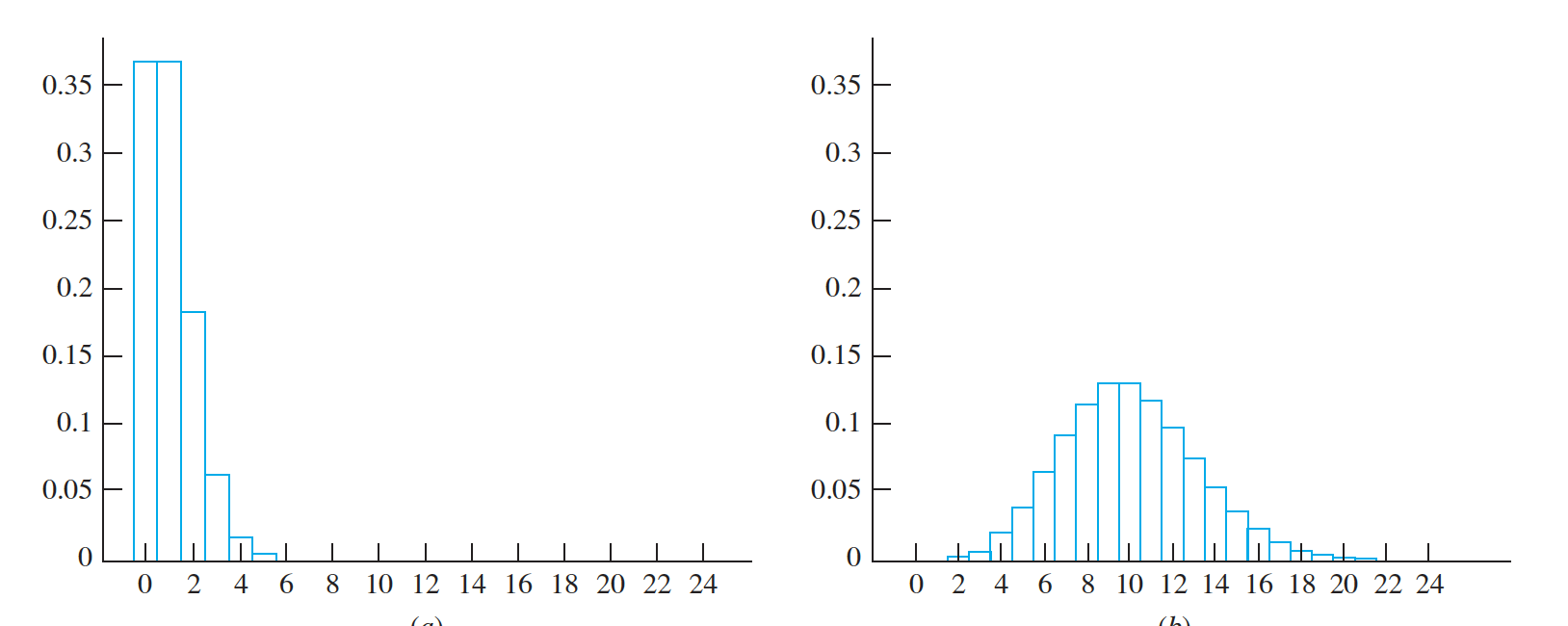

The Poisson distribution arises as an approximation to the binomial distribution when $n$ is large and $p$ is small.

A mass contains $10,000$ atoms of a radioactive substance. The probability that a given atom will decay in a one-minute time period is $0.0002$. Let $X$ represent the number of atoms that decay in one minute. Now each atom can be thought of as a Bernoulli trial, where success occurs if the atom decays. Thus $X$ is the number of successes in $10,000$ independent Bernoulli trials, each with success probability $0.0002$, so the distribution of $X$ is $Bin(10,000, 0.0002)$. The mean of $X$ is $\mu_X = (10,000)(0.0002) = 2$.

Another mass contains $5000$ atoms, and each of these atoms has probability $0.0004$ of decaying in a one-minute time interval. Let $Y$ represent the number of atoms that decay in one minute from this mass. By the reasoning in the previous paragraph,

$Y \sim Bin(5000, 0.0004)$ and $\mu_Y = (5000)(0.0004) = 2$.

In each of these cases, the number of trials $n$ and the success probability $p$ are different, but the mean number of successes, which is equal to the product $np$, is the same.


Now assume that we wanted to compute the probability that exactly three atoms decay in one minute for each of these masses.

Using the binomial probability mass function, we would compute as follows:

$P(X = 3) = \frac {10,000!} {3! ~ 9997! }(0.0002)^3(0.9998)^{9997} = 0.180465091$

$P(Y = 3) = \frac{5000!}{ 3! 4997!} (0.0004)^3(0.9996)^{4997} = 0.180483143$

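These two probabilities are nearly equal, even though they come from different values of $n$ and $p$. We can therefore approximate the binomial mass function with a quantity that depends on the product $np$ only.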

Specifically, if $n$ is large and $p$ is small, and we let $\lambda = np$, it can be shown by advanced methods that for all $x$,

$\frac{n!}{x!(n - x)!}p^x (1 - p)^{n-x} \approx e^{-\lambda} \frac{\lambda^{x}}{x!}$

The variance of a binomial random variable is $np(1 - p)$. Since $p$ is very small, we replace $1 - p$ with $1$ and conclude that the variance is approximately $np = \lambda$, the same as the mean.
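The closeness of the approximation can be checked numerically. The following is a minimal sketch using only the Python standard library; the function names `binomial_pmf` and `poisson_pmf` are illustrative and not part of the original text.

```python
import math

def binomial_pmf(x, n, p):
    """P(X = x) for X ~ Bin(n, p)."""
    return math.comb(n, x) * p**x * (1 - p)**(n - x)

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

# The two radioactive masses from the example: different n and p, same np = 2.
p_x = binomial_pmf(3, 10_000, 0.0002)
p_y = binomial_pmf(3, 5_000, 0.0004)

# Poisson approximation with lambda = np = 2.
p_poisson = poisson_pmf(3, 2.0)

print(f"Bin(10000, 0.0002): {p_x:.6f}")
print(f"Bin(5000, 0.0004):  {p_y:.6f}")
print(f"Poisson(2):         {p_poisson:.6f}")
```

All three values agree to about four decimal places (roughly $0.1805$), which is the sense in which the approximation depends only on the product $np$.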

If $X \sim Poisson(\lambda)$, then

- $X$ is a discrete random variable whose possible values are the non-negative integers. The parameter $\lambda$ is a positive constant. The probability mass function of $X$ is

\(p(x) = P(X = x) = \left\{\begin{array}{ll} e^{-\lambda} \frac{\lambda^x}{x!} & \mbox{if } x \mbox{ is a non-negative integer} \\ 0 & \mbox{otherwise} \end{array}\right.\)

- The Poisson probability mass function is very close to the binomial probability mass function when $n$ is large, $p$ is small, and $\lambda = np$.

- Particles (e.g., yeast cells) are suspended in a liquid medium at a concentration of $10$ particles per mL. A large volume of the suspension is thoroughly agitated, and then $1$ mL is withdrawn. What is the probability that exactly eight particles are withdrawn?

- Particles are suspended in a liquid medium at a concentration of $6$ particles per mL. A large volume of the suspension is thoroughly agitated, and then $3$ mL are withdrawn. What is the probability that exactly $15$ particles are withdrawn?
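Both questions can be answered with the Poisson probability mass function, taking $\lambda$ to be the concentration multiplied by the volume withdrawn. The sketch below assumes that modeling choice; `poisson_pmf` is an illustrative helper name.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam)."""
    return math.exp(-lam) * lam**x / math.factorial(x)

# First question: 10 particles per mL, 1 mL withdrawn, so lambda = 10.
p_eight = poisson_pmf(8, 10 * 1)

# Second question: 6 particles per mL, 3 mL withdrawn, so lambda = 18.
p_fifteen = poisson_pmf(15, 6 * 3)

print(f"P(X = 8)  with lambda = 10: {p_eight:.4f}")
print(f"P(X = 15) with lambda = 18: {p_fifteen:.4f}")
```

The two probabilities come out to approximately $0.1126$ and $0.0786$.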

The Mean and Variance of a Poisson Random Variable

If $X \sim Poisson(\lambda)$, then the mean and variance of $X$ are given by

$\mu_{X} = \lambda$ and $\sigma^2_{X} = \lambda$

Using the Poisson Distribution to Estimate a Rate

Let $\lambda$ denote the mean number of events that occur in one unit of time or space.

Let $X$ denote the number of events that are observed to occur in $t$ units of time or space. Then if $X \sim Poisson(\lambda t)$, $\lambda$ is estimated with $\hat{\lambda} = X/t$.

Uncertainty in the Estimated Rate

The bias is the difference $\mu_{\hat{\lambda}} - \lambda$. Since $\hat{\lambda} = X/t$, it follows from $\mu_{aX} = a\mu_{X}$ that

$\mu_{\hat{\lambda}} = \mu_{X/t} = \frac{\mu_X}{t}$

$= \frac{\lambda t}{t} = \lambda$

Since $\mu_{\hat{\lambda}} = \lambda$, $\hat{\lambda}$ is unbiased.

The uncertainty is the standard deviation $\sigma_{\hat{\lambda}}$. Since $\hat{\lambda} = X/t$, it follows from $\sigma_{aX} = |a|\sigma_{X}$ that $\sigma_{\hat{\lambda}} = \frac{\sigma_{X}}{t}$. Since $X \sim Poisson(\lambda t)$, its variance is $\lambda t$, so $\sigma_{X} = \sqrt{\lambda t}$. Therefore $\sigma_{\hat{\lambda}} = \frac{\sigma_{X}}{t} = \frac{\sqrt{\lambda t}}{t} = \sqrt{\frac{\lambda}{t}}$.

In practice, the value of $\lambda$ is unknown, so we approximate it with $\hat{\lambda}$.

A suspension contains particles at an unknown concentration of $\lambda$ per mL. The suspension is thoroughly agitated, and then $4$ mL are withdrawn and $17$ particles are counted. Estimate $\lambda$.
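A short sketch of this calculation follows. It estimates the rate as $X/t$ and approximates the uncertainty by substituting the estimate for the unknown $\lambda$ in $\sqrt{\lambda/t}$; the function name `estimate_rate` is hypothetical.

```python
import math

def estimate_rate(count, t):
    """Return (rate estimate, approximate uncertainty) for a Poisson count.

    count: number of events observed
    t:     units of time or space over which they were observed
    """
    lam_hat = count / t
    # The true lambda is unknown, so lam_hat stands in for it here.
    uncertainty = math.sqrt(lam_hat / t)
    return lam_hat, uncertainty

# 17 particles counted in 4 mL.
lam_hat, uncertainty = estimate_rate(17, 4)
print(f"Estimated concentration: {lam_hat:.2f} +/- {uncertainty:.2f} particles per mL")
```

For this sample the estimate is $4.25$ particles per mL, with an uncertainty of about $1.03$.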

Derivation of the Mean and Variance of a Poisson Random Variable

Let $X \sim Poisson(\lambda)$. We will show that $\mu_X = \lambda$ and $\sigma^2_X = \lambda$. Using the definition of population mean for a discrete random variable:

$\mu_X = \sum\limits_{x=0}^{\infty} x P(X=x)$

$= \sum\limits_{x=0}^{\infty} x e^{-\lambda} \frac{\lambda^{x}}{x!}$

$= 0 \cdot e^{-\lambda} \frac{\lambda^{0}}{0!} + \sum\limits_{x=1}^{\infty} x e^{-\lambda} \frac{\lambda^{x}}{x!}$

$= \sum\limits_{x=1}^{\infty} e^{-\lambda} \frac{\lambda^{x}}{(x-1)!}$

$= \lambda \sum\limits_{x=1}^{\infty} e^{-\lambda} \frac{\lambda^{x-1}}{(x-1)!}$

$= \lambda \sum\limits_{x=0}^{\infty} e^{-\lambda} \frac{\lambda^{x}}{x!}$

Now $\sum\limits_{x=0}^{\infty} e^{-\lambda} \frac{\lambda^{x}}{x!}$ is the sum of the $Poisson(\lambda)$ probability mass function over all its possible values. Therefore $\sum\limits_{x=0}^{\infty} e^{-\lambda} \frac{\lambda^{x}}{x!} = 1$, and so

$\mu_X = \lambda$
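The variance can be obtained by a similar argument. What follows is a sketch of one standard route, not a reproduction of the book's steps: write $x^2 = x(x-1) + x$, so that $\sigma^2_X = \sum\limits_{x=0}^{\infty} x^2 P(X=x) - \mu_X^2$ splits into a sum that can be handled like the one for the mean.

$\sum\limits_{x=0}^{\infty} x(x-1) e^{-\lambda} \frac{\lambda^{x}}{x!} = \sum\limits_{x=2}^{\infty} e^{-\lambda} \frac{\lambda^{x}}{(x-2)!}$

$= \lambda^2 \sum\limits_{x=2}^{\infty} e^{-\lambda} \frac{\lambda^{x-2}}{(x-2)!}$

$= \lambda^2 \sum\limits_{x=0}^{\infty} e^{-\lambda} \frac{\lambda^{x}}{x!} = \lambda^2$

Therefore $\sum\limits_{x=0}^{\infty} x^2 P(X=x) = \lambda^2 + \lambda$, and

$\sigma^2_X = \lambda^2 + \lambda - \lambda^2 = \lambda$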

The Geometric Distribution

Assume that a sequence of independent Bernoulli trials is conducted, each with the same success probability $p$. Let $X$ represent the number of trials up to and including the first success. Then $X$ is a discrete random variable, which is said to have the geometric distribution with parameter $p$. We write $X \sim Geom(p)$.

If $X \sim Geom(p)$, then the probability mass function of $X$ is

\(p(x) = P(X = x) = \left\{ \begin{array}{ll} p(1 - p)^{x-1} & x = 1, 2, \ldots \\ 0 & \mbox{otherwise} \end{array} \right.\)

A large industrial firm allows a discount on any invoice that is paid within $30$ days. Twenty percent of invoices receive the discount. Invoices are audited one by one. Let $X$ be the number of invoices audited up to and including the first one that qualifies for a discount. What is the distribution of $X$? Find $P(X = 3)$.
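Here $X \sim Geom(0.2)$, since each audited invoice is an independent trial that qualifies with probability $0.2$. A minimal sketch of the calculation, with `geometric_pmf` as an illustrative helper name:

```python
def geometric_pmf(x, p):
    """P(X = x) for X ~ Geom(p): x - 1 failures followed by one success."""
    if x < 1:
        return 0.0
    return p * (1 - p)**(x - 1)

# Two invoices without a discount, then one with a discount.
p_three = geometric_pmf(3, 0.2)
print(f"P(X = 3) = {p_three:.3f}")
```

The result is $(0.8)^2(0.2) = 0.128$.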

The Mean and Variance of a Geometric Distribution

If $X \sim Geom(p)$, then

$\mu_{X} = \frac{1}{p}$

$\sigma^{2}_{X} = \frac{1-p}{p^2}$

The Negative Binomial Distribution

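The negative binomial distribution extends the geometric distribution. Let $r$ be a positive integer, and let $X$ represent the number of trials up to and including the $r$th success in a sequence of independent Bernoulli trials, each with success probability $p$. Then $X$ has the negative binomial distribution with parameters $r$ and $p$, written $X \sim NB(r, p)$.

If $X \sim NB(r, p)$, then the probability mass function of $X$ is

\(p(x) = P(X = x) = \left\{ \begin{array}{ll} \binom{x - 1}{r - 1} p^{r}(1 - p)^{x-r} & x = r, r + 1, \ldots \\ 0 & \mbox{otherwise} \end{array} \right.\)

A Negative Binomial Random Variable Is a Sum of Geometric Random Variables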

If $X \sim NB(r, p)$, then $X = Y_1 + \ldots + Y_r$, where $Y_{1}, \ldots, Y_{r}$ are independent random variables, each with the $Geom(p)$ distribution.

In a large industrial firm, $20\%$ of all invoices receive a discount. Invoices are audited one by one. Let X denote the number of invoices up to and including the third one that qualifies for a discount. What is the distribution of $X$? Find $P(X = 8)$.
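Here $X \sim NB(3, 0.2)$, because $X$ counts the invoices audited up to and including the third success. A minimal sketch of the calculation, with `negative_binomial_pmf` as an illustrative helper name:

```python
import math

def negative_binomial_pmf(x, r, p):
    """P(X = x) for X ~ NB(r, p): the r-th success occurs on trial x."""
    if x < r:
        return 0.0
    return math.comb(x - 1, r - 1) * p**r * (1 - p)**(x - r)

# Third qualifying invoice found on the eighth audit.
p_eight = negative_binomial_pmf(8, 3, 0.2)
print(f"P(X = 8) = {p_eight:.5f}")
```

The result is $\binom{7}{2}(0.2)^3(0.8)^5 \approx 0.05505$.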

The Mean and Variance of the Negative Binomial Distribution

If $X \sim NB(r, p)$, then

$\mu_{X} = \frac{r}{p}$

$\sigma^{2}_{X} = \frac{r(1-p)}{p^2}$


A Negative Binomial Random Variable Is a Sum of Geometric Random Variables

Assume that a sequence of $8$ independent Bernoulli trials, each with success probability $p$, comes out as follows:

$F ~ F ~ S ~ F ~ S ~ F ~ F ~ S$

If $X$ is the number of trials up to and including the third success, then $X \sim NB(3, p)$, and for this sequence of trials, $X = 8$.

Denote the number of trials up to and including the first success by $Y_1$. For this sequence, $Y_1 = 3$, but in general, $Y_1 \sim Geom(p)$. Now count the number of trials, starting with the first trial after the first success, up to and including the second success. Denote this number of trials by $Y_2$. For this sequence, $Y_2 = 2$, but in general, $Y_2 \sim Geom(p)$. Finally, count the number of trials, beginning from the first trial after the second success, up to and including the third success. Denote this number of trials by $Y_3$. For this sequence, $Y_3 = 3$, but again in general, $Y_3 \sim Geom(p)$.

It is clear that $X = Y_1 + Y_2 + Y_3$. Furthermore, since the trials are independent, $Y_1$, $Y_2$, and $Y_3$ are independent. This shows that if $X \sim NB(3, p)$, then $X$ is the sum of three independent $Geom(p)$ random variables.

This result can be generalized to any positive integer $r$.
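The $r = 3$ case can also be checked numerically by summing the product of three geometric probabilities over every way of splitting $x$ trials into $Y_1 + Y_2 + Y_3$, and comparing the total with the negative binomial probability mass function. This is an illustrative check rather than a proof; the function names and the choice $p = 0.2$ are assumptions made for the example.

```python
import math

def geometric_pmf(x, p):
    """P(Y = x) for Y ~ Geom(p)."""
    return p * (1 - p)**(x - 1) if x >= 1 else 0.0

def negative_binomial_pmf(x, r, p):
    """P(X = x) for X ~ NB(r, p)."""
    if x < r:
        return 0.0
    return math.comb(x - 1, r - 1) * p**r * (1 - p)**(x - r)

def sum_of_three_geometrics_pmf(x, p):
    """P(Y1 + Y2 + Y3 = x) for independent Y1, Y2, Y3 ~ Geom(p)."""
    total = 0.0
    for y1 in range(1, x - 1):
        for y2 in range(1, x - y1):
            y3 = x - y1 - y2  # always at least 1 given the loop bounds
            total += geometric_pmf(y1, p) * geometric_pmf(y2, p) * geometric_pmf(y3, p)
    return total

p = 0.2  # assumed success probability for the check
for x in range(3, 13):
    direct = negative_binomial_pmf(x, 3, p)
    via_sum = sum_of_three_geometrics_pmf(x, p)
    print(f"x = {x:2d}  NB pmf = {direct:.6f}  sum of geometrics = {via_sum:.6f}")
```

The two columns agree for every $x$, because the number of ways to split $x$ trials into three positive parts is exactly $\binom{x-1}{2}$.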